1 Introduction

In engineering practice, the data we get may contain a large number of missing values, repeated values, outliers, etc., and a large amount of noise. It may also be that there are outliers due to manual input errors, which is not conducive to train of algorithm model. For the task of data preprocessing, it is commonly said that there are 4 steps:

- data cleaning

- data integration

- data transformation

- data optimization

2 Data Cleansing

2.1 Remove unique attributes

The unique attributes are usually id attributes, which cannot describe the distribution of the sample, so they can be deleted.

2.2 Handle missing values

-

Delete those features containing missing values: it is applicable to an attribute containing a large number of missing values, with the missing rate greater than 80%.

-

Missing value filling: based on data distribution, modeling prediction, interpolation, maximum likelihood estimation.

-

Fill according to data distribution:if the data conforms to uniform distribution, fill the gap with the mean value of the variable or the homogeneous mean value of the variable; if the data has skewed distribution, fill the gap with the median. -

Modeling prediction filling:the data set is divided into two categories according to whether the missing value contains a specific attribute, the missing attribute is predicted as the prediction target, then the missing value can be predicted by using the existing machine learning algorithm (regression, Bayesian, random forest, decision tree and other models). (The flaw of this approach is that the predicted result is meaningless if the other attributes are unrelated to the missing attribute; However, if the prediction result is quite accurate, it shows that the missing attribute is not necessary to be included in the data set because their high relationship. The general case is somewhere in between the above two cases.) -

Interpolation filling:including random interpolation, multiple interpolation, Lagrange interpolation, Newton interpolation, manual filling and so on.- Multiple interpolation considers that the value to be interpolated is random. In practice, it usually estimates the value to be interpolated and adds different noises to form multiple groups of alternative interpolation values. According to some selection basis, the most appropriate interpolation value is selected.

- The interpolation process only supplements the unknown value with our subjective estimate, which does not necessarily conform to the objective fact.In many cases, it is better to manually interpolate missing values based on your understanding of the domain.

-

To sum up, the missing proportion of the variable is first detected by using pandas.isnull.sum(), and then deletion or filling is considered. If the variable to be filled is continuous, mean method and interpolation method are generally used for filling; if the variable is discrete, median or modeling prediction are usually used for filling.

2.3 Outlier handling

Outliers are usually defined as abnormal or noisy, which refers to those data outside a particular distribution range. Exceptions can be divided into two types: “pseudo-exceptions”, which are generated by specific business operation actions and normally reflect the state of the business rather than the anomaly of the data itself; “True anomalies” are not caused by specific business operations, but rather anomalies in the distribution of data, namely outliers.

Outlier detection method:

-

Based on simple statistical analysis: determine whether there are exceptions based on boxplot and each point. For example, “Describe function” in Pandas can quickly find outliers.

-

Based on the principle of 3σ: If the data has a normal distribution and is far from the mean value, the points within the range of P( x-u > 3σ) <= 0.003 are generally defined as outliers. -

Based on distance: By defining the proximity measure between objects, the exception object can be judged according to the distance whether it is far away from other objects. The disadvantage is that the computational complexity is high and it is not suitable for large data sets and data sets with different density regions.

-

Density-based: The local density of outliers is significantly lower than that of most neighbor points, which is suitable for non-uniform data sets.

- Clustering based: The clustering algorithm is used to discard the small clusters that are far away from other clusters.

Methods of outlier treatment

-

Considering whether to delete this record according to the number and impact of outliers, information loss is high.

-

If the outliers are eliminated after the log-scale logarithmic transformation of the data, then this method is effective without loss of information.

-

The mean or median replaces the outlier, which is simple and efficient with less loss of information.

-

In the training of tree model, the tree model has higher robustness to outliers without information loss and does not affect the training effect of the model.

3 Data integration

Data integration is mainly to increase the amount of sample data. The main methods are as follows:

-

Data consolidation: Physically import data together into the same data store. This usually involves data warehousing technology.

-

Data propagation: Data is copied from one location to another using an application called “data propagation. “This procedure can be performed synchronously or asynchronously and is an event-driven operation.

-

Data virtualization: Use interfaces to provide a unified, real-time view of data from many different sources. Data can be viewed from a single point of access.

4 Data transformation

Data transformation includes normalization, discretization and sparse processing of data to achieve the purpose of mining.

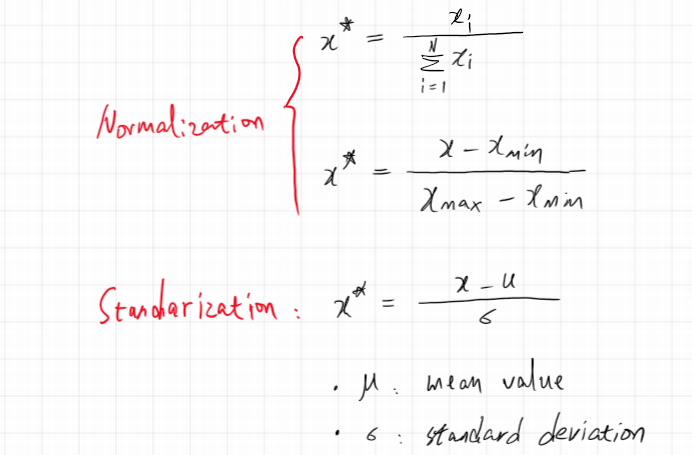

4.1 Normalization and Standarization

Standardized and normalized data: dimensionality of different features in the data may be inconsistent, and the difference between values may be very large. Without processing, the results of data analysis may be affected. Therefore, it is necessary to scale the data in a certain proportion to make it fall in a specific area for comprehensive analysis. In particular, the distance-based methods, clustering, KNN and SVM must be normalized.

-

Why: It is necessary to eliminate the influence of different magnitudes of different attributes in samples: The difference of orders of magnitude will lead to the dominant position of the larger magnitude of attributes;The difference of order of magnitude will lead to slow down the convergence speed of iteration;Algorithms that depend on the sample distance are very sensitive to the order of magnitude of the data.

-

How?

-

Example:

input standardized normalized 0.0 -1.336306 0.0 1.0 -0.801784 0.2 2.0 -0.267261 0.4 3.0 0.267261 0.6 4.0 0.801784 0.8 5.0 1.336306 1.0

4.2 Sparse processing

-

For discrete and nominal variables that cannot be ordered LabelEncoder, variables are usually considered to be sparse and 0,1 dumb variable.

-

For example, the animal type variable contains four different values of cat, dog, pig and sheep, and the variable is converted into four dumb variables of is_ pig, is_ cat, is_ dog and is_ sheep. If there are many different values of variables, according to the frequency, the values with fewer occurrences can be uniformly classified into a class of ‘rare’. The sparse processing is not only conducive to the rapid convergence of the model, but also can improve the anti-noise ability of the model.

5 Data optimization

The purpose is to reduce the amount of data, reduce the dimension of data, delete redundant information, improve the accuracy of analysis, and reduce the amount of calculation by selecting important features. In a word, the two main reasons for feature selection are: (1) alleviate the dimension disaster; (2) reduce the difficulty of learning tasks. The methods are as follows:

-

Principal component analysis (PCA): PCA maps the current dimension to the lower dimension by means of spatial mapping so that the variance of each variable in the new space is maximized.

-

Singular value decomposition (SVD): SVD has a low interpretability of dimensionality reduction, and its computation is larger than PCA. SVD is generally used for dimensionality reduction on sparse matrices, such as image compression and recommendation system.

-

Clustering: Clustering a class of similar features into a single variable, thus greatly reducing the dimensions.